Analyzing Website Logs with Screaming Frog SEO Log File Analyser

These days few people know how to analyze a site's logs, let alone by hand (as it used to be done). Yet sometimes it is necessary and genuinely useful. Logs are primary data: every visit to the site, by a bot or a human, is recorded in them in full.

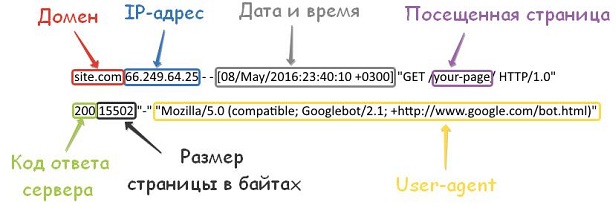

What can they tell you? Let's look at an example. A single log line contains:

- the domain;

- the IP address the request came from;

- the exact time of the request;

- the requested page, i.e. the URL the crawler visited;

- the response code, which both bots and people receive;

- the page size in bytes;

- the user-agent: the name of the client that contacted the server, which tells you which browser or bot requested the page.

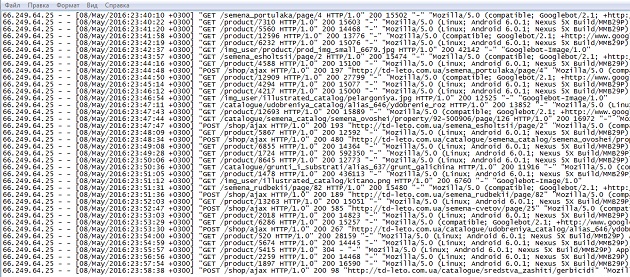
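
To make these fields concrete, here is a minimal Python sketch that splits one such line into its parts. It assumes the common "combined" log format with the domain prepended, and the sample line (domain, IP, URL) is made up. Real servers are configured differently, so the regular expression will likely need adjusting. Screaming Frog does this parsing for you; the sketch only illustrates what a log line carries.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Assumed format: "combined" log line with the domain (virtual host) prepended.
# Adjust the pattern to match your server's actual log configuration.
LOG_RE = re.compile(
    r'(?P<domain>\S+) (?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"[^"]*" "(?P<user_agent>[^"]*)"'
)


@dataclass
class LogEntry:
    domain: str
    ip: str
    time: datetime
    url: str
    status: int
    size: int  # bytes; a "-" in the log is stored as 0
    user_agent: str


def parse_line(line: str) -> Optional[LogEntry]:
    """Return a LogEntry, or None if the line does not match the assumed format."""
    m = LOG_RE.match(line)
    if m is None:
        return None
    return LogEntry(
        domain=m["domain"],
        ip=m["ip"],
        time=datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z"),
        url=m["url"],
        status=int(m["status"]),
        size=0 if m["size"] == "-" else int(m["size"]),
        user_agent=m["user_agent"],
    )


sample = (
    'example.com 66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /blog/post-1/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
print(entry.url, entry.status, entry.size)  # /blog/post-1/ 200 5120
```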

Now imagine logs that contain not a couple of visits but hundreds or even thousands of bot visits.

Analyzing data like that by hand is very hard, isn't it?

I'm not saying it's impossible, but the analysis will take you a great deal of time.

Fortunately, there are now plenty of log analysis tools, both paid and free. After trying several of them, I settled on Screaming Frog's SEO Log File Analyser, and that is what today's review is about.

The tool is paid: one license costs $99 per year. There is also a free version, with some limitations:

- 1 project;

- only 1,000 lines in the event log;

- no free technical support.
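
If you are trying out the free version, one option is to import a smaller, more relevant sample instead of the raw file. The sketch below is a hypothetical pre-filter (not part of Screaming Frog) that keeps only lines mentioning a few search-bot tokens and retains the most recent 1,000 of them. The token list and the `latest_bot_lines` name are illustrative assumptions, and it assumes the log lines are in chronological order, as appended logs normally are.

```python
from collections import deque
from typing import Iterable, List, Sequence

# Illustrative user-agent tokens; extend the tuple for other bots.
BOT_TOKENS = ("Googlebot", "YandexBot", "bingbot")


def latest_bot_lines(lines: Iterable[str], limit: int = 1000,
                     tokens: Sequence[str] = BOT_TOKENS) -> List[str]:
    """Keep only lines mentioning a bot token, then the last `limit` of them."""
    recent = deque(maxlen=limit)  # older matches are discarded automatically
    for line in lines:
        if any(token in line for token in tokens):
            recent.append(line)
    return list(recent)
```

For example, you could pass an open log file (`open("access.log", encoding="utf-8", errors="replace")`) and write the result to a new file before importing it. Matching on the user-agent text alone does not prove a request really came from a search engine.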

You can find more details and download the program here.

If you think the program will do everything for you, you are mistaken. It does most of the work: it collects all the data in one place and presents it in a convenient, understandable form. The most important task is left to you: analyzing that data, drawing conclusions, and writing recommendations.

So what does this tool actually do?

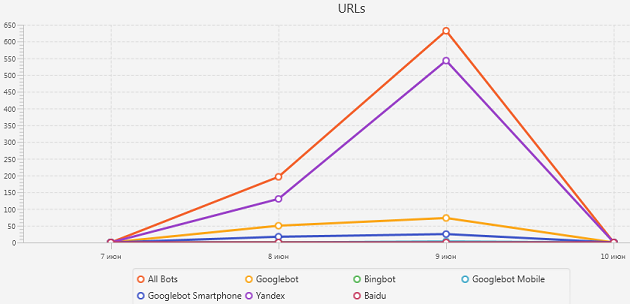

- Identifies crawled URLs: see exactly which URLs Googlebot and other search bots are able to crawl, when, and how often.

- Shows crawl frequency: see which pages search bots visit most often and how many URLs are crawled each day.

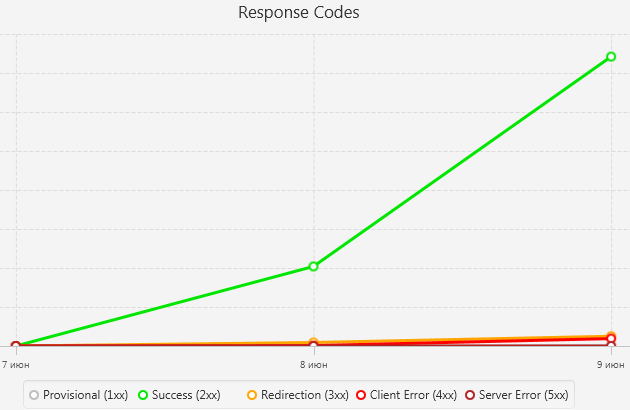

- Finds broken links and errors: shows every response code, broken link, and error that search bots encountered while crawling the site.

- Audits redirects: finds the temporary and permanent redirects that search bots encounter.

- Helps optimize crawl budget: shows your most and least frequently crawled URLs, so you can spot waste and make crawling more efficient.

- Finds uncrawled and orphan pages: you can import a list of URLs and match it against the log data to uncover unknown pages or URLs that Googlebot has not been able to crawl.

- Lets you combine and compare any data.
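
Crawl frequency and crawl budget largely come down to counting. As a rough illustration of this kind of calculation (not Screaming Frog's actual code), this sketch assumes the log has already been parsed into `(day, url)` pairs for a single bot. It returns hits per URL and the number of distinct URLs crawled each day. The sample data is invented.

```python
from collections import Counter, defaultdict
from datetime import date
from typing import Dict, Iterable, Set, Tuple

# Each hit is assumed to be a (day, url) pair already filtered to one bot.
Hit = Tuple[date, str]


def crawl_frequency(hits: Iterable[Hit]) -> Tuple[Counter, Dict[date, int]]:
    """Return hits per URL and the number of distinct URLs crawled per day."""
    per_url: Counter = Counter()
    urls_by_day: Dict[date, Set[str]] = defaultdict(set)
    for day, url in hits:
        per_url[url] += 1
        urls_by_day[day].add(url)
    per_day = {day: len(urls) for day, urls in sorted(urls_by_day.items())}
    return per_url, per_day


hits = [
    (date(2023, 10, 1), "/"), (date(2023, 10, 1), "/catalog/"),
    (date(2023, 10, 1), "/"), (date(2023, 10, 2), "/"),
    (date(2023, 10, 2), "/old-page/"),
]
per_url, per_day = crawl_frequency(hits)
print(per_url.most_common(1))  # [('/', 3)]  most crawled
# Least crawled URLs: sort ascending by hit count.
print(sorted(per_url.items(), key=lambda kv: kv[1])[:2])  # [('/catalog/', 1), ('/old-page/', 1)]
print(per_day)
```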

Let's get started. I don't expect installing the program to cause you any trouble, so let's move on to the main step: loading the data.

You can import two types of data:

- log files;

- a list of URLs (in Excel format).

Let's start with importing log files.

First, you need to get your site's log files (access.log) from the server. They are often stored in a /logs/ or /access_logs/ folder, and you can download them to your computer via FTP.

To have enough data for analysis, I'd recommend using a month's worth of log files.
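
A month of logs usually means several files, because servers commonly rotate logs and compress older ones (names like `access.log.1` or `access.log.2.gz`). Here is a minimal sketch for reading them all as one stream, assuming that naming pattern. Your hosting may name or store the files differently.

```python
import gzip
from pathlib import Path
from typing import Iterator


def iter_log_lines(directory: str, pattern: str = "access.log*") -> Iterator[str]:
    """Yield lines from all matching log files, decompressing *.gz files.

    Assumes rotated logs named like access.log, access.log.1, access.log.2.gz.
    """
    for path in sorted(Path(directory).glob(pattern)):
        opener = gzip.open if path.suffix == ".gz" else open
        # errors="replace" keeps reading even if a line has invalid bytes
        with opener(path, "rt", encoding="utf-8", errors="replace") as f:
            for line in f:
                yield line.rstrip("\n")
```

The files are read in lexical name order. That order stops matching chronological order once there are ten or more rotated files (`access.log.10` sorts before `access.log.2`), so sort by the parsed timestamps if order matters.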

Once you have downloaded them, simply click the "Import" button and choose "Log file".

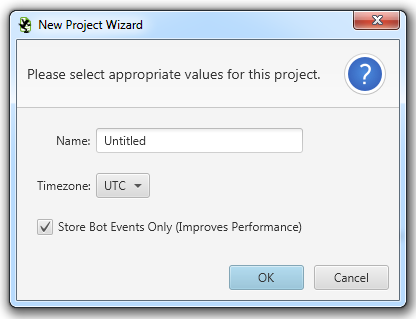

Once the data has loaded, you'll need to name the project (anything that works for you, as long as you can tell later what it is) and select a time zone.
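
The time zone matters because log timestamps carry their own UTC offset, and converting them can move a hit into a different day, which changes per-day statistics. Here is a small sketch, assuming the project time zone is a fixed UTC+3 offset (chosen only for illustration):

```python
from datetime import datetime, timedelta, timezone

# Assumed project time zone: a fixed UTC+3 offset, for illustration only.
PROJECT_TZ = timezone(timedelta(hours=3))


def to_project_tz(raw: str, tz: timezone = PROJECT_TZ) -> datetime:
    """Parse an access-log timestamp like '31/Oct/2023:22:30:00 +0000' into `tz`."""
    return datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z").astimezone(tz)


utc_hit = "31/Oct/2023:22:30:00 +0000"
local = to_project_tz(utc_hit)
print(local.isoformat())  # 2023-11-01T01:30:00+03:00
```

A hit logged late on October 31 in UTC is counted on November 1 in the project's time zone.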

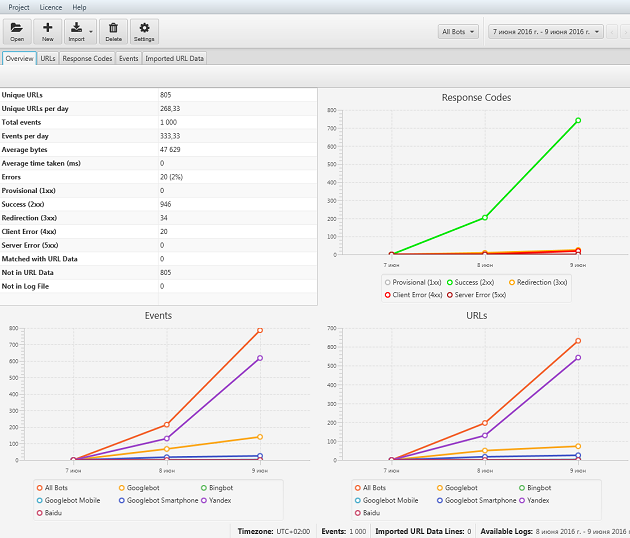

The main dashboard window then appears:

Here you can immediately see which search bots visited your site and how often, how many pages they crawl, and so on.
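
Behind the "which bots visited" overview is a classification by the user-agent string. Here is a simplified sketch with an illustrative token list (Googlebot, YandexBot, bingbot). It is an assumption about how such grouping can work, not a description of Screaming Frog's internals.

```python
from collections import Counter
from typing import Iterable

# Illustrative mapping of lowercase User-Agent substrings to search engines.
BOT_SIGNATURES = {
    "googlebot": "Google",
    "yandexbot": "Yandex",
    "bingbot": "Bing",
}


def classify(user_agent: str) -> str:
    """Return the search engine name for a bot user-agent, or "Other"."""
    ua = user_agent.lower()  # case-insensitive matching
    for token, engine in BOT_SIGNATURES.items():
        if token in ua:
            return engine
    return "Other"


def hits_by_bot(user_agents: Iterable[str]) -> Counter:
    """Count requests per search engine (plus "Other" for everything else)."""
    return Counter(classify(ua) for ua in user_agents)


user_agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:118.0) Gecko/20100101 Firefox/118.0",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
]
print(hits_by_bot(user_agents).most_common())  # [('Google', 2), ('Yandex', 1), ('Other', 1)]
```

User-agent strings are easy to fake, so a serious audit also verifies that requests claiming to be Googlebot actually come from Google, typically with a reverse DNS lookup.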

After that, you can move on to deeper analysis. For example, you can find which URLs are slowest to load and which are visited most often.

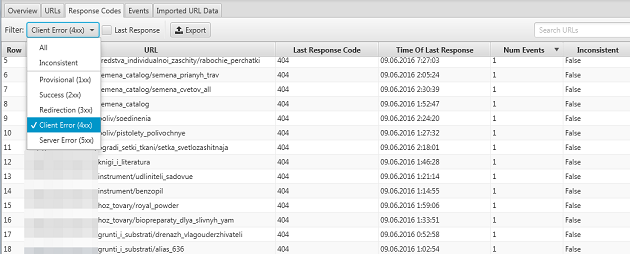
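
Finding slow URLs is only possible if your log records how long each request took (for example, Nginx's `$request_time` variable). The plain combined format does not include this. Assuming such a field exists and has been extracted as `(url, seconds)` pairs, the average per URL can be computed like this (sample values are invented):

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def slowest_urls(samples: Iterable[Tuple[str, float]], top: int = 3) -> List[Tuple[str, float]]:
    """Average response time per URL, slowest first.

    `samples` are (url, seconds) pairs; assumes the log records per-request time.
    """
    times: Dict[str, List[float]] = defaultdict(list)
    for url, seconds in samples:
        times[url].append(seconds)
    averages = [(url, mean(values)) for url, values in times.items()]
    return sorted(averages, key=lambda item: item[1], reverse=True)[:top]


samples = [("/", 0.12), ("/catalog/", 1.8), ("/catalog/", 2.2), ("/search?q=x", 0.9)]
print(slowest_urls(samples, top=2))
```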

You can also see which response codes URLs return. Go to the "Response Codes" tab, where you can filter URLs by status code type. This is how you find errors.

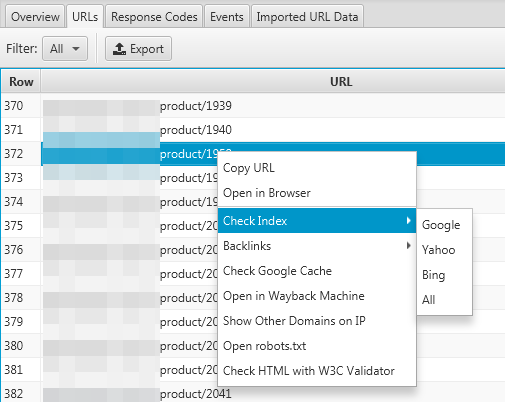
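
The grouping that the "Response Codes" tab offers can be sketched in a few lines. The code counts hits per status class and collects the URLs that returned redirects (3xx, e.g. 301 permanent or 302 temporary), client errors (4xx), or server errors (5xx). The input is assumed to be `(url, status)` pairs already extracted from the log, and the sample data is invented.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def status_class(code: int) -> str:
    """Map a status code to its class: 200 -> "2xx", 301 -> "3xx", 404 -> "4xx"."""
    return f"{code // 100}xx"


def summarize_status(hits: Iterable[Tuple[str, int]]) -> Tuple[Counter, Dict[str, List[str]]]:
    """Count hits per status class and list unique URLs for each non-2xx class."""
    counts: Counter = Counter()
    flagged: Dict[str, List[str]] = {"3xx": [], "4xx": [], "5xx": []}
    for url, code in hits:
        cls = status_class(code)
        counts[cls] += 1
        if cls in flagged and url not in flagged[cls]:
            flagged[cls].append(url)  # keep first-seen order, no duplicates
    return counts, flagged


hits = [
    ("/", 200), ("/old-url/", 301), ("/old-url/", 301),
    ("/promo/", 302), ("/deleted/", 404), ("/search/", 503),
]
counts, flagged = summarize_status(hits)
print(flagged["3xx"])  # ['/old-url/', '/promo/']
```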

I also discovered a neat feature ☺ Right-clicking a URL gives you options such as:

- copy the URL;

- open the URL in a browser;

- check whether the page is indexed by search engines (Google, Yahoo, Bing);

- view the external links pointing to that specific page;

- open the robots.txt file, and more.

You can see the full list in the following screenshot:

Copying and opening a URL are standard features, of course. But checking index status or jumping to robots.txt in one click is more interesting and, above all, very convenient.
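
To check outside the tool which crawled URLs a robots.txt disallows, you can use Python's standard `urllib.robotparser` for a quick offline check. The robots.txt content and URLs below are hypothetical. This parser applies rules in file order, while Google documents using the most specific matching rule, so results can differ when `Allow` and `Disallow` rules overlap.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; with network access you could instead call
# parser.set_url("https://example.com/robots.txt") and parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def blocked_urls(urls: Iterable[str], robots_txt: str, agent: str = "Googlebot") -> List[str]:
    """Return the URLs that `agent` is not allowed to fetch under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


crawled = [
    "https://example.com/",
    "https://example.com/admin/login",
    "https://example.com/cart/",
]
print(blocked_urls(crawled, ROBOTS_TXT))  # ['https://example.com/admin/login', 'https://example.com/cart/']
```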

Now for the second import method: loading a list of URLs in Excel format. Why would you need that, you might ask?

The program can import URLs from various sources and combine them with the log file for more advanced analysis. Once they are imported, it can show which URLs match and which don't across these data sets.
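
At its core, this matching is a set comparison. Here is a minimal sketch that assumes the imported list (for example, a column exported from Excel) and the logged URLs are already loaded as strings. URLs are reduced to path and query so that absolute and relative forms compare equal. This is a simplifying assumption: it ignores the domain, and `/page` and `/page/` still count as different URLs.

```python
from typing import Dict, Iterable, Set
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a URL to path + query so absolute and relative forms compare equal."""
    parts = urlsplit(url.strip())
    path = parts.path or "/"
    return path + (f"?{parts.query}" if parts.query else "")


def compare_url_sets(imported: Iterable[str], logged: Iterable[str]) -> Dict[str, Set[str]]:
    """Split URLs into matched, never crawled, and crawled-but-not-listed."""
    listed = {normalize(u) for u in imported}
    crawled = {normalize(u) for u in logged}
    return {
        "matched": listed & crawled,       # in the list and requested by the bot
        "not_crawled": listed - crawled,   # in the list, never requested
        "only_in_logs": crawled - listed,  # requested, but missing from the list
    }


imported = ["https://example.com/", "https://example.com/catalog/", "https://example.com/new-page/"]
logged = ["/", "/catalog/", "/old-page/?utm=1"]
print(compare_url_sets(imported, logged)["not_crawled"])  # {'/new-page/'}
```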

This kind of comparison lets you analyze the site for a whole range of questions:

- Which pages on your site are visited most often? Which pages has the bot never visited at all?

- Has the bot visited every URL in the XML sitemap? If not, why not?

- How often does the bot check the sitemap for updates?

- When a page changes, how much time passes between the recrawl and the moment the search index is updated?

- How do new links affect crawl speed?

- How quickly will a newly launched site or site section be crawled?

And many other questions.
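
For the sitemap question in particular, the following sketch reads the `<loc>` entries of a standard XML sitemap and lists the ones with no bot hits. It assumes a single `urlset` file (sitemap index files are not handled). It also assumes that logged paths have been prefixed with the site's domain, so both sides use absolute URLs. The sample sitemap and domain are invented.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> List[str]:
    """Return the <loc> values of a <urlset> sitemap, in document order."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def never_crawled(xml_text: str, crawled_urls: Iterable[str]) -> List[str]:
    """Sitemap URLs that never appear among the crawled (absolute) URLs."""
    seen = set(crawled_urls)
    return [url for url in sitemap_urls(xml_text) if url not in seen]


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/catalog/</loc></url>
  <url><loc>https://example.com/new-section/</loc></url>
</urlset>"""

# Paths from the log, prefixed with the (hypothetical) site domain.
crawled = ["https://example.com" + path for path in ["/", "/catalog/"]]
print(never_crawled(SITEMAP, crawled))  # ['https://example.com/new-section/']
```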

The potential for analysis is nearly unlimited. By comparing different data sets, you can gain meaningful insight into how your site interacts with search bots. That, in turn, helps you uncover problems that other SEO tools would not find so easily.

Overall, log files contain a huge amount of information that can help you analyze your site's performance and avoid certain mistakes, and Screaming Frog SEO Log File Analyser will help you with that analysis.

Head of the SEO department at the marketing agency MAVR. 4 years of experience in SEO.

Experience in industries including beauty, sports, advertising services, and more.

Number of projects: more than 94